Three.js Curator

Dual-Layer Bias Intelligence. An advanced NLP agent that goes beyond simple keyword filtering by combining a strict Sentiment Mapping Registry with a contextual Implicit Bias Framework. This system detects both explicit policy violations (slurs, hate speech) and subtle, systemic issues like attribute bias, double standards, and institutional gatekeeping—providing actionable, educational feedback for safer, more inclusive communication.

Stack: Gemini 3.0 Pro via Google Opal | Dual-Layer Reasoning (System 1 + System 2) | Chain-of-Thought Prompting | Contextual Data Retrieval | HTML/CSS Generation

The Curator

Who is this for?

This is for anyone who wants to check a passage of text or a text document for possible bias (the shorter, the better). There are two agents with nearly identical functions; the validated version normally yields more accurate results at the cost of additional time and model compute. You may upload large portions of text (or a full document), but Google Gemini will then take a very long time to process your request and return the results page. If either agent returns an error, refresh the page and ask the agent to reprocess the request.

NOTE: Both agents take time to process text, because they apply the bias framework and sentiment mappings to identify both explicit and implicit biases.

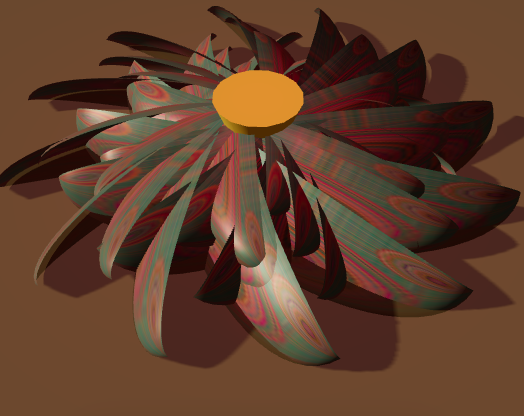

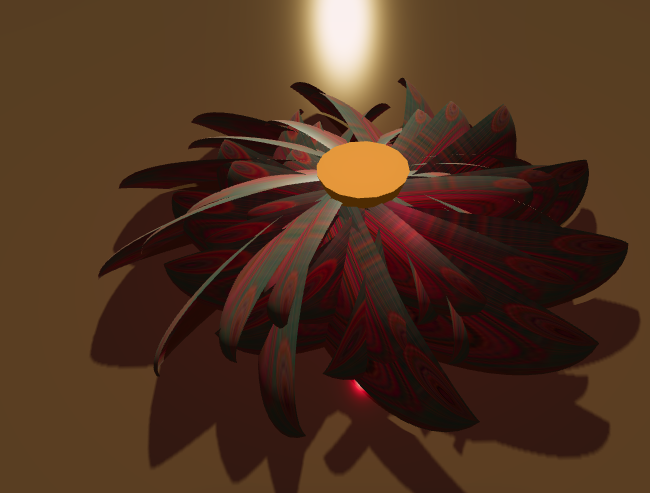

Persona 1 - The Curious Artist: Original | HTML

The Scenario: A curious digital artist found this application and realized it could be used to sketch great ideas. To test its capabilities, the artist drew up a mundane but romantic prompt. Satisfied with the results, they downloaded the generated HTML file to explore further.

Prompt: "Draw a blooming lotus flower floating on a still pond at sunset, using the photo I attached as its petals."

Evaluate - ?

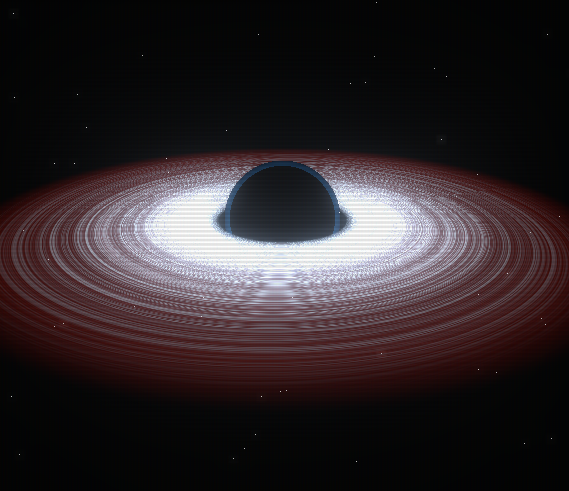

Persona 2 - The Passionate Astrophysicist: Original | HTML

The Scenario: An astrophysicist stumbled upon this application while looking through different use cases for Google Opal. Interested in the concept, they wanted to see if they could generate a supermassive black hole with specific details. After prompting, they realized that the result could be further customized and possibly integrated into modeling, with a bit of help from Claude Code.

Prompt: "A supermassive black hole surrounded by an accretion disk of superheated plasma, with gravitational lensing distorting the space around it. The environment should feel like deep space — cold, dark, and vast. Make it feel cinematic."

Evaluate - ?

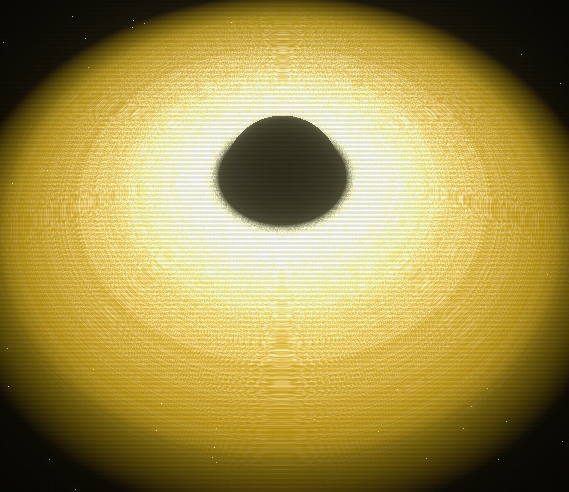

Persona 3 - The Silly Poet: Original | HTML

The Scenario: A silly poet found this application while bored. Honestly? They just wanted to waste time, so they wrote a silly prompt romanticizing a single soap bubble and, in Dadaist fashion, gave it a personified narrative.

Prompt: "A single soap bubble floating in golden afternoon light, catching all the colors of a sunset on its surface. The floor should look like warm polished hardwood. Everything should feel peaceful and slightly nostalgic."

Evaluate - ?

Methodology: Dual-Layer Reasoning Architecture

The Bias Detector uses a hybrid cognitive architecture designed to avoid the common pitfalls of standard AI moderation. Instead of relying on a single pass, the system splits analysis into two distinct processing layers:

System 1 (Fast Path): Sentiment Mapping Registry. The input is first scanned against a deterministic registry of known hate speech, slurs, and coded language (derived from the DGHS and MLMA datasets). This ensures immediate, hallucination-free flagging of explicit policy violations.

System 2 (Slow Path): Counterfactual Inference Engine. For text that passes the explicit filter, the system engages a chain-of-thought "Flip Test." It mentally substitutes demographics (e.g., swapping "she" for "he") to detect tonal discrepancies, attribute bias, and double standards that keyword filters miss.

Contextual Grounding: All findings are validated against a custom Implicit Bias Framework (Section 2.3) to distinguish between harmless comments and institutional gatekeeping.

Technical Architecture: The Counterfactual Inference Engine

Detecting implicit bias requires more than a list of banned words; it demands a system capable of semantic reasoning and counterfactual analysis. This tool implements a Dual-Process Cognitive Architecture to audit text for fairness and inclusion.

The Solution: At its core, this agent implements a Two-Stage Filtering Protocol, followed by contextual grounding and an optional validation pass, so that every analysis is both fast (for obvious hate speech) and deep (for subtle discrimination):

Stage 1: Deterministic Sentiment Mapping (System 1):

Mechanism: Scans input against a curated High-Velocity Toxicity Registry derived from the ConvAbuse, MLMA, and DGHS datasets.

Purpose: Provides immediate, near-instant flagging of slurs, hate symbols, and known dog-whistles.
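The Stage 1 lookup can be pictured as a plain dictionary scan. The sketch below is a minimal illustration under stated assumptions: `REGISTRY`, `Flag`, and `scan` are hypothetical names, the entries are placeholders (the real registry derived from ConvAbuse, MLMA, and DGHS is not reproduced here), and whole-word regex matching stands in for whatever matching the agent actually performs. Because no model is involved, the same input always produces the same flags.

```python
import re
from dataclasses import dataclass

# Hypothetical registry entries (term -> category). Placeholders stand in for
# real slurs and dog-whistles; this is NOT the agent's actual registry.
REGISTRY = {
    "slur_placeholder": "slur",
    "dogwhistle_placeholder": "dog-whistle",
    "urban youth": "coded language",
}


@dataclass(frozen=True)
class Flag:
    term: str
    category: str
    start: int  # character offset of the match in the input


# Precompile one case-insensitive, whole-word pattern per registry entry.
_PATTERNS = [
    (term, category, re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE))
    for term, category in REGISTRY.items()
]


def scan(text: str) -> list[Flag]:
    """Deterministic lookup: no model call, so identical input gives identical flags."""
    flags = [
        Flag(term, category, match.start())
        for term, category, pattern in _PATTERNS
        for match in pattern.finditer(text)
    ]
    return sorted(flags, key=lambda flag: flag.start)
```

For example, `scan("The report blamed Urban Youth for the damage.")` returns a single `coded language` flag, while a neutral sentence returns an empty list.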

Stage 2: Counterfactual "Flip Test" (System 2):

Mechanism: Engages a Chain-of-Thought (CoT) reasoning loop that mentally swaps demographic markers (e.g., changing "she" to "he"). It then measures the Semantic Drift in the descriptors used.

Purpose: Detects Attribute Bias (e.g., praising men for "leadership" vs. women for "support") and Institutional Gatekeeping (e.g., "culture fit" as a proxy for exclusion).
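A rough, deterministic approximation of the Flip Test is sketched below. It is an illustration, not the agent's method: the agent performs this step through chain-of-thought reasoning in Gemini, whereas here `SWAPS`, `DESCRIPTORS`, `flip`, `profile`, and `semantic_drift` are hypothetical, the word lists are tiny, and Semantic Drift is assumed to be the Jaccard distance between the descriptors a subject receives before and after the demographic markers are swapped.

```python
import re

# Hypothetical swap table (illustrative subset only). "her" is ambiguous
# (his/him); this sketch simply maps it to "his".
SWAPS = {"she": "he", "he": "she", "her": "his", "his": "her", "him": "her",
         "woman": "man", "man": "woman", "women": "men", "men": "women"}

# Hypothetical descriptor lexicon used to measure drift.
DESCRIPTORS = {"emotional", "passionate", "leadership", "supportive",
               "aggressive", "assertive", "decisive", "helpful"}


def flip(text: str) -> str:
    """Swap gendered words, preserving a leading capital letter."""
    def swap(match):
        word = match.group(0)
        key = word.lower()
        if key not in SWAPS:
            return word
        replacement = SWAPS[key]
        return replacement.capitalize() if word[0].isupper() else replacement
    return re.sub(r"[A-Za-z]+", swap, text)


def profile(text: str) -> dict[str, set[str]]:
    """Map 'she'/'he' to the descriptors found in sentences that mention them."""
    result = {"she": set(), "he": set()}
    for sentence in re.split(r"[.!?]+", text):
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        found = words & DESCRIPTORS
        for subject in result:
            if subject in words:
                result[subject] |= found
    return result


def semantic_drift(text: str, subject: str = "she") -> float:
    """Jaccard distance between a subject's descriptors before and after the flip."""
    before = profile(text)[subject]
    after = profile(flip(text))[subject]
    union = before | after
    if not union:
        return 0.0
    return 1 - len(before & after) / len(union)
```

In `"She is emotional in meetings. He is passionate in meetings."`, the flip hands "passionate" to her and "emotional" to him, so the drift is 1.0; if both are described as "decisive", the drift is 0.0.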

Stage 3: Contextual Grounding:

Mechanism: Validates all findings against a custom Implicit Bias Framework (Section 2.3) to differentiate between neutral operational language and systemic exclusion.
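One way to picture Stage 3 is a lookup against framework entries plus a context check. This sketch assumes a hypothetical two-entry excerpt of the framework (the real Section 2.3 framework is not reproduced here) and a hypothetical rule: a phrase is labeled as systemic exclusion only when its sentence also contains a decision cue such as "hire" or "reject"; otherwise it is treated as neutral operational language. `FRAMEWORK`, `DECISION_CUES`, and `ground` are illustrative names.

```python
import re

# Hypothetical excerpt of an Implicit Bias Framework (phrase -> category).
FRAMEWORK = {
    "culture fit": "institutional gatekeeping",
    "digital native": "institutional ageism (proxy attribute)",
}

# Assumed cue words signalling that a phrase gates access to an opportunity.
DECISION_CUES = {"hire", "hired", "hiring", "reject", "rejected",
                 "promote", "promotion", "candidate", "candidates"}


def ground(sentence: str) -> list[tuple[str, str]]:
    """Return (phrase, label) pairs for framework phrases found in the sentence."""
    lowered = sentence.lower()
    words = set(re.findall(r"[a-z]+", lowered))
    results = []
    for phrase, category in FRAMEWORK.items():
        if phrase in lowered:
            # Without a decision cue, the phrase is treated as operational language.
            label = category if words & DECISION_CUES else "neutral operational language"
            results.append((phrase, label))
    return results
```

Under these assumptions, "We rejected the candidate because he was not a culture fit." is labeled institutional gatekeeping, while a survey question about how employees define culture fit is not.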

Stage 4: Validation (Optional):

Mechanism: Re-checks all findings against the user's prompt and refines the generated page's design, improving both accuracy and the user's experience.

Safety & Integrity: To prevent false positives, the architecture includes a Neutrality Guardrail. If the semantic drift during the "Flip Test" is negligible (e.g., a factual business report), the system defaults to a "No Bias Detected" state, ensuring it does not hallucinate problems in safe content.
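The Neutrality Guardrail amounts to a threshold check that runs after the explicit filter. In this minimal sketch, `verdict`, the `0.2` threshold, the drift dimension names, and every label other than "No Bias Detected" are assumptions; the source does not state how "negligible" drift is quantified.

```python
# Hypothetical cut-off below which drift counts as "negligible"; the source gives no value.
DRIFT_THRESHOLD = 0.2


def verdict(drift_scores: dict[str, float], explicit_flags: int = 0) -> str:
    """Map Stage 1 flags and Stage 2 drift scores to a top-level result.

    Explicit flags are reported first, since only text that passes the
    explicit filter reaches the Flip Test. Labels other than
    "No Bias Detected" are illustrative assumptions.
    """
    if explicit_flags > 0:
        return "Explicit Violation"
    worst = max(drift_scores.values(), default=0.0)
    if worst < DRIFT_THRESHOLD:
        return "No Bias Detected"
    return "Potential Implicit Bias"
```

For instance, `verdict({"gender": 0.05})` returns "No Bias Detected", while `verdict({"gender": 1.0})` returns "Potential Implicit Bias". Defaulting to the neutral state when no scores exist mirrors the guardrail's intent of not inventing problems in safe content.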

Prompt Guide for High-Value Results

To generate the most accurate and actionable insights from the Bias Detector, use prompts that challenge the system to find both explicit policy violations and subtle institutional barriers:

The "Double Standard" Prompt: "Analyze this performance review for a female employee described as 'emotional' versus a male employee described as 'passionate.' Does the system detect the gendered attribute bias? [add performance review text]"

The "Gatekeeping Audit" Prompt: "Review this job description for a 'digital native' role. Identify any coded language or proxy attributes that might create institutional ageism or exclude protected groups. [add job description text]"

The "Hidden Toxicity" Prompt: "Scan this incident report about 'urban youth' for coded racism and dehumanizing metaphors. Explain how the language might enforce systemic bias even without explicit slurs. [add incident report text]"

Explainer

Technical Insight: This interactive agent represents the second phase of my research-to-product pipeline, built directly on the findings of my Capstone, "Analyzing Hate Speech."

Leveraging Google’s experimental Opal platform, this project demonstrates a Multi-Agent Workflow that orchestrates specialized reasoning loops (System 1 + System 2). While the initial research focused on training SBERT and Random Forest models to classify high-velocity toxicity in political datasets, this implementation operationalizes those insights into a resilient, enterprise-grade Bias Intelligence System. It shows that cutting-edge, experimental agentic frameworks can be engineered to address real-world problems like institutional bias and exclusionary language.

CFornesa

I am the Multidisciplinary Web Architect.


Copyright © 2025. Chris Fornesa. All rights reserved.